Extremistan is not where IS terrorists rule; it is the fictitious country introduced in N.N. Taleb’s renowned book “The Black Swan: The Impact of the Highly Improbable”. It is sometimes contrasted with Taleb’s other imaginary country, Mediocristan, to describe the impact of globalization and the internet revolution. In Mediocristan live the traditional providers of individual services, the small-town shop owners and the craftsmen, i.e. people who either have only a very local market for their goods or are basically paid by the hour. A physician may have a very high hourly rate, but there are only 24 hours per day for him to charge to his customers. A hundred years ago, almost everyone lived in Mediocristan, even the local opera singer or soccer player. People went to the local opera stage and watched the local soccer game. The “market” in Mediocristan was limited to the local population, so the prospects of making large amounts of money were slim, but on the other hand there was little competition and many in the local community could make a decent living.

Now globalization and the internet have taken (some of) us to Extremistan. Here the opera singer, the novelist, the movie star and the soccer player have a global audience, and the potential for making money is vast. However, as the competition has also become tremendous, very few will make “all” the money, whereas the rest can’t make a living. Who wants to pay to listen to the mediocre local opera singer when “The Three Tenors” are on TV? Who wants to pay to see the local soccer team play the team from the next suburb when there is a Champions League game on?

Web browser, smartphone and tablet “apps” clearly meet all the requirements to reside in Extremistan. The technical platforms are highly standardized. The apps and web-based services mostly provide generic infotainment services that address many users with the same needs (or users who are even willing to adapt their lifestyle to the “app”); they use English and are designed to run everywhere in the world, on all platforms, networks etc. If needed, they can be enhanced by throwing more communication bandwidth and cloud-based storage and/or computational capacity at them. In short, apps and web services are highly scalable, can be used everywhere, and most of them have tremendous growth opportunities. At almost zero (marginal) cost they can be provided in an instant and have the potential to reach billions of people. No doubt, this has made some people incredibly rich and has dramatically lowered the cost of many services. On the other hand, many more have lost their jobs in local bookstores, travel agencies and photo print shops: jobs that were perfectly OK in Mediocristan but no longer exist in Extremistan.

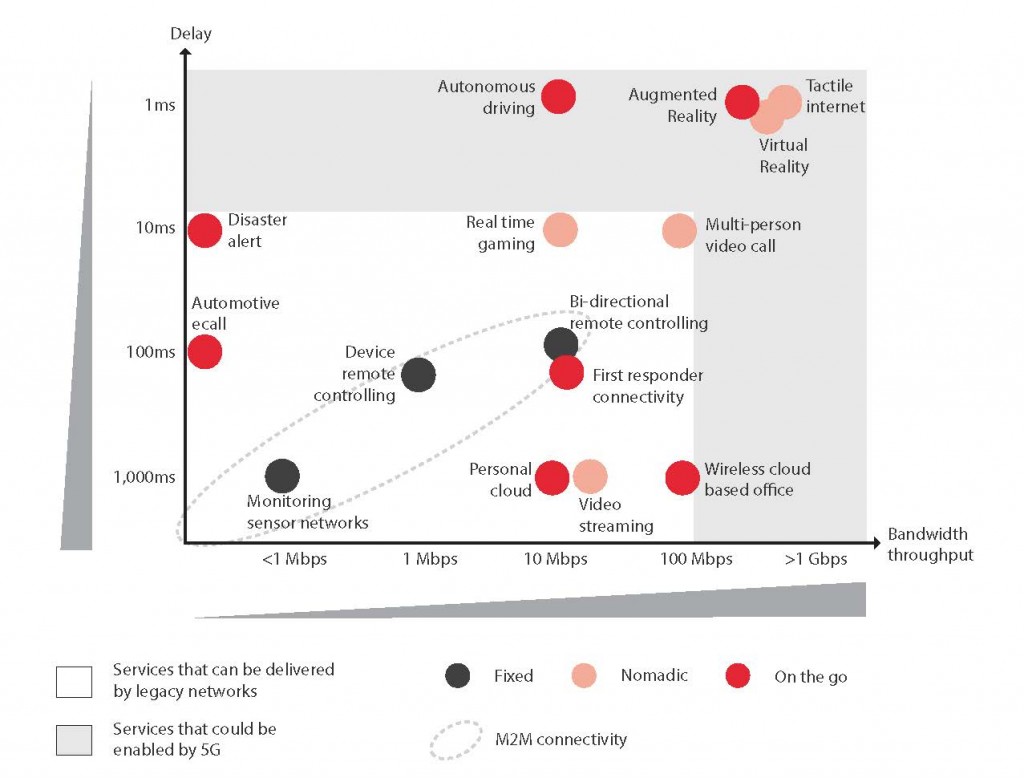

So what about the “Internet of Things”? Is there an “exponential” growth in numbers and benefits similar to that of the infotainment business, or in other words, can the IoT concept exist in Extremistan? Or is “Internet of Things” a contradiction in terms? Are we, as Gartner predicts, just at the top of the hype cycle, heading down into disillusionment? Remember, we have been here before, not so long ago (around 2000). So what is fundamentally different today compared to the era of the last hype? Yes, Moore’s law has made a few cycles since, but is that all?

A key observation is that “things” live in and interact with the physical world. On the “Planet of the Apps”, services run in highly standardized environments in cyberspace. They are, by painstaking design, removed from the physical world to make them scalable and capable of running everywhere, on any platform. The “things” are part of cyber-physical systems; they interact with an environment that is different almost everywhere. They work only “here”, and it’s not obvious how we can leverage Moore’s law to improve their performance by increasing bandwidth, storage or computational power. “IoT apps” still have to be tailored to specific environments and systems in a “craftsman” fashion, and that work will not be directly applicable anywhere else.

Example: the “smart” home. Although it’s obvious how to engineer (at least in principle), say, a home climate control system with sensors and actuators, you will not be able to buy the solution in an app store with a few clicks. As your current home is rather “dumb”, you still have to install sensors and actuators in the proper places. This is likely to be done by some consultant (system integrator), someone who will adapt and tune the system to your house. Then you have to interface with the heating and electrical systems in your house. These may look different in different countries, and for safety reasons the installation has to be done by another specialist, the electrician. Who will make the most money in this business? It’s likely to be the consultant and the electrician, hardly some global player like Apple or Google, or even the internet operator. Right, the consultant and the electrician have to physically come to your home and they are paid by the hour, i.e. they both live in Mediocristan. At least for now, they cannot be replaced by some cloud service or some call center in Asia. This has a significant impact on the cost of making your old home “smart”, to the extent that very few will see any economic upside, e.g. in saving energy.

What about future homes? In the past, homes were designed by architects who actually took pride in making every new home different. In the future, new homes might well be produced in a more standardized way; they will have standardized interfaces and behavior that will allow for generic control that can be provided as a global service. This transition has little to do with Moore’s law and other drivers from cyberspace. As most buildings are built to last more than 50 years, it will take many decades until a significant share of our homes meet these requirements.

You can take similar examples from transportation systems and health care. IoT technology can certainly provide large benefits in these areas. However, in addition to the differences in physical environment from instance to instance, we also have to deal with the inertia of organizations. All this boils down to the fact that most of the time and money will be spent on adapting systems and organizations, not on sensors, communication or cloud-based services. This adaptation unfortunately does not come at the speed of light or at (almost) zero cost. Nevertheless, there are still large efficiency savings that will drive organizations to introduce IoT technology. This is serious business; however, again the bulk of the money is not likely to be made by global internet players but by “local” system integrators from Mediocristan who know the environment and the business of the customer.

So do all IoT applications live in Mediocristan? The ones we discussed above were not scalable due to the diverse environments they operate in. Are there applications that operate in a standardized physical environment and could therefore scale? The environment that comes to my mind is the human body. Our bodies are very similar and have the same functions around the globe, so interfacing them with “things” should work everywhere in the world. Gadgets attached to our bodies for personal health and fitness are indeed taking off big time, partly due to our Western-world obsession with health, but also due to their scalability: these gadgets work everywhere, are easily connected to the smartphone platforms and benefit from cloud-based services. They are only a (standardized) Bluetooth or WiFi connection away from cyberspace. In professional healthcare, however, there may still be organizational barriers, as some decisions still have to be taken by physicians from Mediocristan.

Other examples that look promising include the car industry. Although there is a lot of activity in “connected cars”, standardized platforms that allow global scaling are conceivable but still far away. Is the car industry really interested in going down this risky path, or does it aim at containing and controlling the technology for its own purposes only? There is a striking resemblance to the mobile phone industry before the iPhone and the App Store. “Yes, mobile services, but on our terms” are some famous last words.

In summary, my take on the “IoT revolution” is:

- Yes, it will happen, but much more slowly than we expect/hope. The key economic incentives are in making big systems more efficient. In big systems, the upside is usually big, so we can afford to build them in Mediocristan. The “internet industry” plays a “sidekick” role, as it does not know anything about the actual applications.

- Yes, smart homes, controlling your heating, sensors everywhere, connecting your flowerpot and refrigerator: it can all be done and you can already buy all the gadgets, but actually doing something sensible with them today requires a craftsman’s skills. The “internet scalability” is not there, and it will be tough to reach an attractive price point for consumers. Unless you are a geek, there is a dire need for a “killer app” to pay for it all.

- Closest to Extremistan are personal “things” on your body, and possibly your car, if the car industry is really interested.